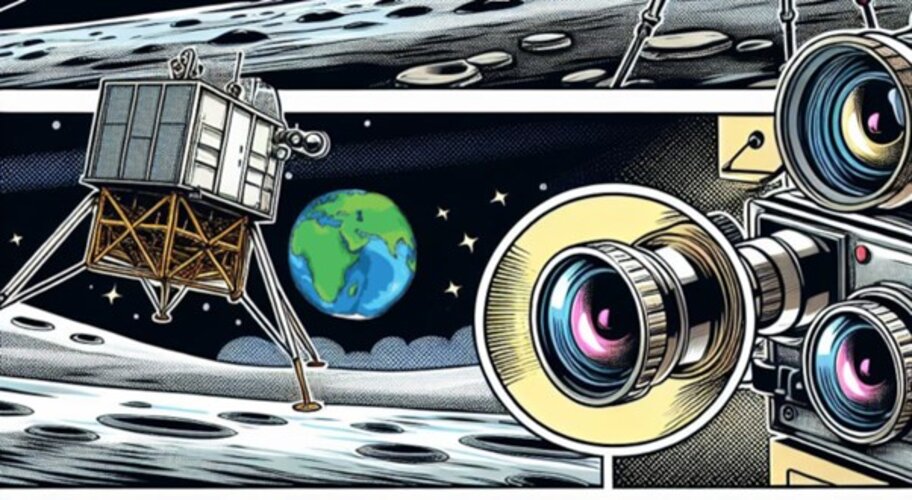

ACT’s Pietro Fanti explains: “Instead of capturing colour images using the RGB (red, green, and blue) channels like traditional cameras, an event camera records changes in brightness intensity for each pixel.

“This allows us to capture data at a very high speed and even in challenging lighting conditions, such as scenes with very bright or very dark areas. In addition, the sensor has lower power consumption when compared to conventional cameras.”

Dario adds: “These qualities make event cameras a great tool for navigation during Moon landings. To explore this application, we have generated a large number of landing trajectories, and then we simulated the event data that an event camera fixed to a lunar lander would capture in that situation. Now we are asking the community to navigate our imaginary spacecraft through a safe, highly precise landing.”

“To generate data for this project, we used PANGU – space video simulation software developed for ESA by the University of Dundee,” says ACT’s Leon Williams. “These simulated videos were then converted into event streams using video2events software made by ETH Zürich.”
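
To give a feel for what converting video into an event stream involves, here is a minimal sketch in Python. It is not the video2events software or the ACT pipeline. It assumes a simplified, idealised sensor model in which each pixel emits an event whenever its log-brightness has changed by more than a fixed threshold since that pixel's last event. The function name `frames_to_events`, the `threshold` and `eps` parameters, and the `(timestamp, x, y, polarity)` event layout are all illustrative choices, not taken from any real tool.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical event layout: (timestamp, x, y, polarity), polarity is +1 or -1.
Event = Tuple[float, int, int, int]


def frames_to_events(
    frames: Sequence[Sequence[Sequence[float]]],
    timestamps: Sequence[float],
    threshold: float = 0.2,
    eps: float = 1e-3,
) -> List[Event]:
    """Turn greyscale frames into brightness-change events (idealised model).

    frames[k][y][x] is the non-negative intensity of pixel (x, y) in frame k.
    Each pixel remembers a reference log-intensity; whenever the current
    log-intensity differs from it by at least `threshold`, one event is
    emitted per full threshold step and the reference moves by that amount.
    """
    if len(frames) != len(timestamps):
        raise ValueError("need exactly one timestamp per frame")
    if not frames:
        return []

    # Per-pixel reference level, initialised from the first frame.
    # eps avoids log(0) for completely black pixels.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]

    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                diff = math.log(value + eps) - ref[y][x]
                steps = int(abs(diff) / threshold)
                if steps == 0:
                    continue  # change too small: this pixel stays silent
                polarity = 1 if diff > 0 else -1
                events.extend((t, x, y, polarity) for _ in range(steps))
                ref[y][x] += polarity * steps * threshold
    return events


if __name__ == "__main__":
    # A 1x2 image: the left pixel never changes, the right one brightens, then darkens.
    video = [[[0.5, 0.5]], [[0.5, 1.0]], [[0.5, 0.25]]]
    for event in frames_to_events(video, [0.0, 0.01, 0.02], threshold=0.5):
        print(event)
```

In this toy example the unchanging pixel produces no output at all, which illustrates why such a sensor can be fast and frugal: only pixels whose brightness changes generate data. A real simulator would also need to model effects such as noise and events that fall between frames, which this sketch ignores.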