When you travel somewhere where they speak a language you can't understand, it's usually important to find a way to translate what's being communicated to you. In some ways, the same can be said about scientific data collected from cosmic objects.

A telescope like NASA's Chandra X-ray Observatory captures X-rays, which are invisible to the human eye, from sources across the cosmos. Similarly, the James Webb Space Telescope captures infrared light, also invisible to the human eye. The data recorded from these different kinds of light are transmitted down to Earth, packed up in the form of ones and zeroes. From there, the data are transformed into a variety of formats, from plots to spectra to images.

This last category, images, is arguably what telescopes are best known for. For most of astronomy's long history, however, most people who are blind or have low vision (BLV) have not been able to fully experience the data that these telescopes have captured.

NASA's Universe of Sound data sonification program, together with NASA's Chandra X-ray Observatory and NASA's Universe of Learning, translates visual data of objects in space into sound. All telescopes in space, including Chandra, Webb, the Hubble Space Telescope, and dozens of others, need to send the data they collect back to Earth as binary code or digital signals.

Typically, astronomers and others turn these digital data into images, which are often spectacular and make their way into everything from websites to pillowcases.

The music of the spheres

By taking these data through another step, however, experts on this project mathematically map the information into sound. This data-driven process is not a reimagining of what the telescopes have observed; it is yet another kind of translation. Instead of a translation from French to Mandarin, it's a translation from visual to sound.
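To make the idea of "mathematically mapping" data into sound more concrete, here is a minimal Python sketch of one common style of image sonification. It is not the Universe of Sound team's actual pipeline: the function name `sonify_image`, the left-to-right scan, the 200 to 1,000 Hz pitch range, and the rule that brightness sets loudness are all hypothetical choices made for illustration.

```python
import math

def sonify_image(image, sample_rate=8000, column_seconds=0.05,
                 f_low=200.0, f_high=1000.0):
    """Turn a 2D brightness grid (rows of values in [0, 1]) into audio samples.

    Hypothetical mapping, for illustration only:
    - the image is scanned left to right, one column per time slice;
    - each row gets a fixed pitch, with the top row highest;
    - a pixel's brightness sets the loudness of that row's tone.
    """
    n_rows = len(image)
    n_cols = len(image[0])
    # Linearly spaced pitches: top row -> f_high, bottom row -> f_low.
    if n_rows == 1:
        freqs = [f_high]
    else:
        step = (f_high - f_low) / (n_rows - 1)
        freqs = [f_high - r * step for r in range(n_rows)]

    samples_per_col = round(sample_rate * column_seconds)
    samples = []
    for c in range(n_cols):
        for i in range(samples_per_col):
            t = (c * samples_per_col + i) / sample_rate
            # Mix one sine tone per row, weighted by that pixel's brightness.
            value = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                        for r in range(n_rows))
            # Divide by the row count so the mix stays within [-1, 1].
            samples.append(value / n_rows)
    return samples

# A tiny 3x4 "image": a bright spot that climbs from bottom-left to top-right,
# so the resulting sound rises in pitch over time.
image = [
    [0.0, 0.0, 0.2, 1.0],
    [0.0, 0.8, 1.0, 0.2],
    [1.0, 0.3, 0.0, 0.0],
]
audio = sonify_image(image)
print(len(audio))  # 1600 (4 columns x 400 samples per column)
```

Mapping vertical position to pitch and brightness to volume is only one possible design; the mappings used in the actual NASA releases may differ from this sketch.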

Releases from the Universe of Sound sonification project have been immensely popular with non-experts, generating viral news stories that potentially reached more than two billion people, according to press metrics, and tripling the usual traffic to the Chandra.si.edu website.

But how are such data sonifications perceived by people, particularly members of the BLV community? How do data sonifications affect participant learning, enjoyment, and exploration of astronomy? Can translating scientific data into sound help enable trust or investment, emotionally or intellectually, in scientific data? Can such sonification help improve awareness of accessibility needs that others might have?

Listening closely

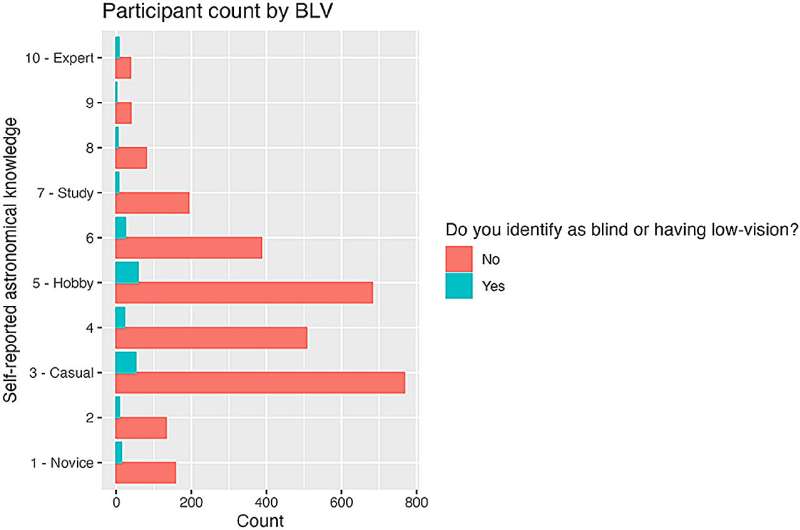

This study used our sonified NASA data of three astronomical objects. We surveyed blind or low-vision and sighted individuals to better understand their experiences of the sonifications, including their enjoyment, understanding, and trust of the scientific data. Analyses of data from 3,184 sighted and blind or low-vision participants showed significant self-reported learning gains and positive experiential responses.

The results showed that astrophysical data engaging multiple senses, like sonification, could establish additional avenues of trust, increase access, and promote awareness of accessibility in sighted and blind or low-vision communities. In short, sonifications helped people access and engage with the universe.

Sonification is an evolving and collaborative field. The project is done not only for the BLV community but also in partnership with BLV collaborators. A new documentary available on NASA's free streaming platform NASA+ explores how these sonifications are made and the team behind them. The hope is that sonifications can help communicate the scientific discoveries from our universe to more audiences and open the door to the cosmos just a little wider for everyone.

The findings are published in the journal Frontiers in Communication.

More information: Kimberly Kowal Arcand et al, A Universe of Sound: processing NASA data into sonifications to explore participant response, Frontiers in Communication (2024). DOI: 10.3389/fcomm.2024.1288896

Provided by Frontiers